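Last time I showed you how to scrape the beauty photos from Bi'an (netbian). I wonder how many of you couldn't resist grabbing a few extra pictures and ended up with your IP banned.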

This time I'll show you how to build an IP pool, so Mom never has to worry about my IP getting banned again.

First, let's welcome today's victim:

Kuaidaili (快代理): https://www.kuaidaili.com/

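A quick analysis: we need two pieces of data, the IP and the port number (port). Each value sits in a table cell with its own distinctive `data-title` attribute, so we can match them with `re`. With the parsing module settled, let's get to it!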
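To see why the `data-title` attribute makes regex matching easy, here is a minimal sketch that runs the two patterns against a hand-written HTML fragment. `SAMPLE_HTML` is a hypothetical stand-in shaped like the table cells described above (the addresses come from documentation-only ranges), not a copy of the live page, whose markup may have changed.

```python
import re

# Hypothetical fragment shaped like two rows of the proxy table; the live markup may differ
SAMPLE_HTML = '''
<tr><td data-title="IP">203.0.113.10</td><td data-title="PORT">8080</td><td data-title="TYPE">HTTP</td></tr>
<tr><td data-title="IP">198.51.100.7</td><td data-title="PORT">3128</td><td data-title="TYPE">HTTP</td></tr>
'''

# The data-title value pins each pattern to one column; (.*?) lazily captures the cell text
pattern_ip = re.compile('<td data-title="IP">(.*?)</td>')
pattern_port = re.compile('<td data-title="PORT">(.*?)</td>')

ips = pattern_ip.findall(SAMPLE_HTML)
ports = pattern_port.findall(SAMPLE_HTML)
print(ips)    # ['203.0.113.10', '198.51.100.7']
print(ports)  # ['8080', '3128']
```

Because `.*?` is non-greedy, each match stops at the first `</td>`, so cells on the same line (such as the `TYPE` column) are never swallowed into the IP or port.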

```python
import requests


def get_page(url, headers):
    """Fetch a page and return its HTML text, or None on a non-200 response."""
    response = requests.get(url, headers=headers, timeout=10)
    response.encoding = 'utf-8'
    if response.status_code == 200:
        return response.text
    return None


def main():
    # Pages 1-4 of the free list: only the trailing number in the URL changes
    for i in range(1, 5):
        url = f'https://www.kuaidaili.com/free/inha/{i}/'
        headers = {
            'User-Agent': 'Fiddler/5.0.20204.45441 (.NET 4.8; WinNT 10.0.19042.0; zh-CN; 8xAMD64; Auto Update; Full Instance; Extensions: APITesting, AutoSaveExt, EventLog, FiddlerOrchestraAddon, HostsFile, RulesTab2, SAZClipboardFactory, SimpleFilter, Timeline)'
        }
        html = get_page(url, headers)  # parsed in the next step


if __name__ == '__main__':
    main()
```

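Because we are scraping free proxies, and free proxies have a very low survival rate, we need to collect a large number of them to end up with enough working ones. So we also handle pagination.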

The addresses of the site's second and third pages are:

https://www.kuaidaili.com/free/inha/2/

https://www.kuaidaili.com/free/inha/3/

Only the trailing number changes, and replacing it with 1 loads the first page just fine. So a for loop over that number gives us pagination, as shown in the code above.
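One detail worth keeping in mind: Python's `range` excludes its stop value, so `range(1, 5)` visits pages 1 to 4, not 5. A small helper with an inclusive upper bound can make the page span explicit. `page_urls` and `BASE_URL` are hypothetical names for illustration; only the URL pattern itself comes from the article.

```python
# URL pattern taken from the article; only the page number changes
BASE_URL = 'https://www.kuaidaili.com/free/inha/{}/'


def page_urls(first, last):
    """Return list-page URLs for pages first through last, both inclusive."""
    return [BASE_URL.format(page) for page in range(first, last + 1)]


for url in page_urls(1, 4):
    print(url)
```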

Once we have the page HTML, use the `re` module to match the data we want:

```python
import re


def analysis(html):
    """Extract 'ip:port' strings from one page of the proxy list."""
    pattern_ip = re.compile('<td data-title="IP">(.*?)</td>')
    pattern_port = re.compile('<td data-title="PORT">(.*?)</td>')
    ip_list = pattern_ip.findall(html)
    port_list = pattern_port.findall(html)
    agent = []
    # zip pairs each IP with the port from the same row, including the last row
    for ip, port in zip(ip_list, port_list):
        agent.append(ip + ':' + port)
    return agent
```

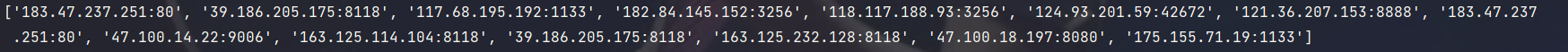

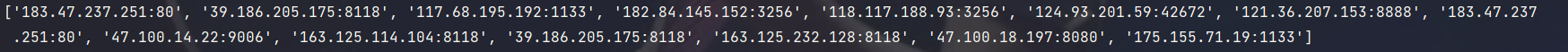

Two regular expressions match the IPs and ports separately, and a for loop then joins each pair with `:`.
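When joining the two lists, note that looping over `range(len(ip_list) - 1)` skips the last row, because `range` already leaves out its stop value. A short comparison on hypothetical sample lists shows the difference, and why `zip` is the simpler way to pair the columns:

```python
ip_list = ['203.0.113.10', '198.51.100.7', '192.0.2.44']
port_list = ['8080', '3128', '80']

# Index loop with "- 1": stops one element short
by_index = [ip_list[i] + ':' + port_list[i] for i in range(len(ip_list) - 1)]

# zip walks both lists row by row and stops at the shorter one, so no row is skipped
by_zip = [ip + ':' + port for ip, port in zip(ip_list, port_list)]

print(by_index)  # ['203.0.113.10:8080', '198.51.100.7:3128']
print(by_zip)    # ['203.0.113.10:8080', '198.51.100.7:3128', '192.0.2.44:80']
```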

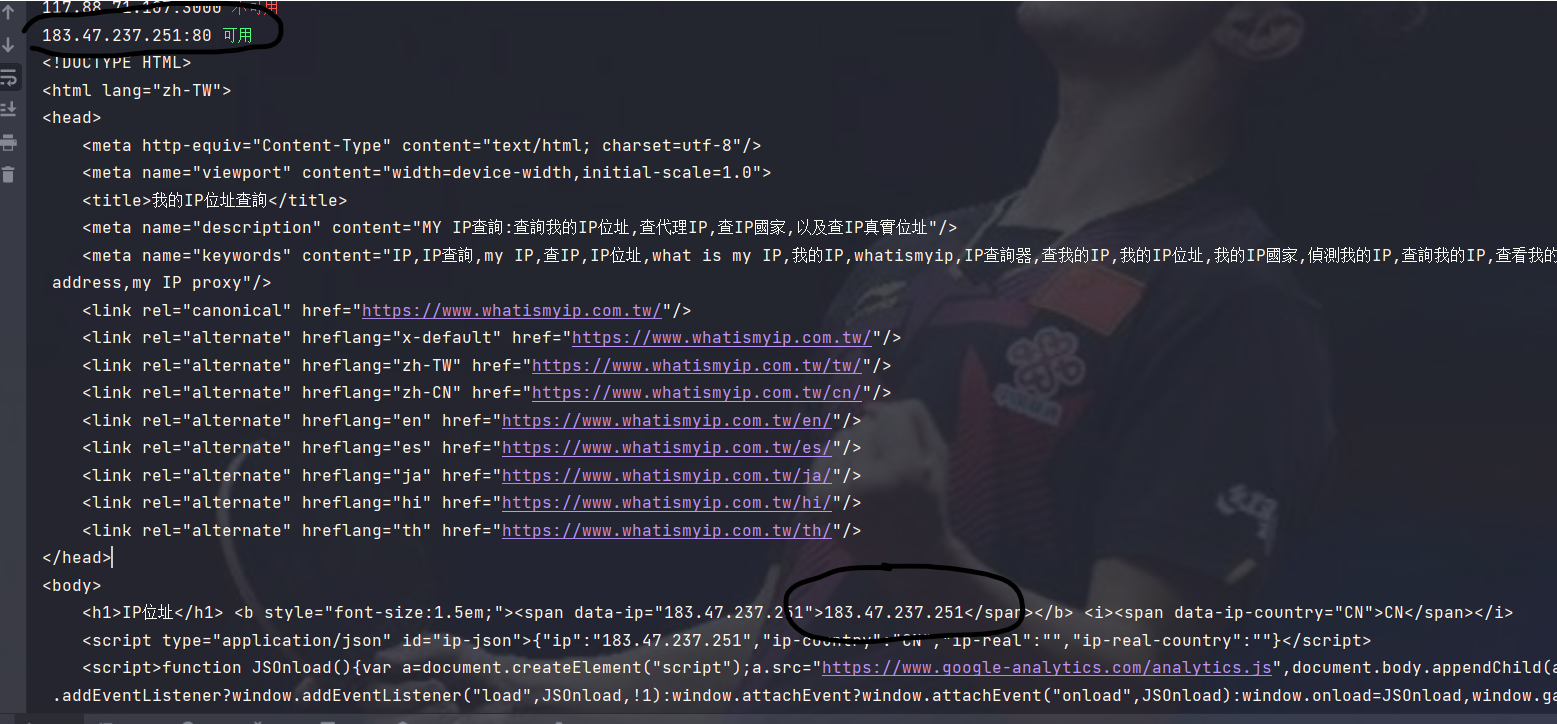

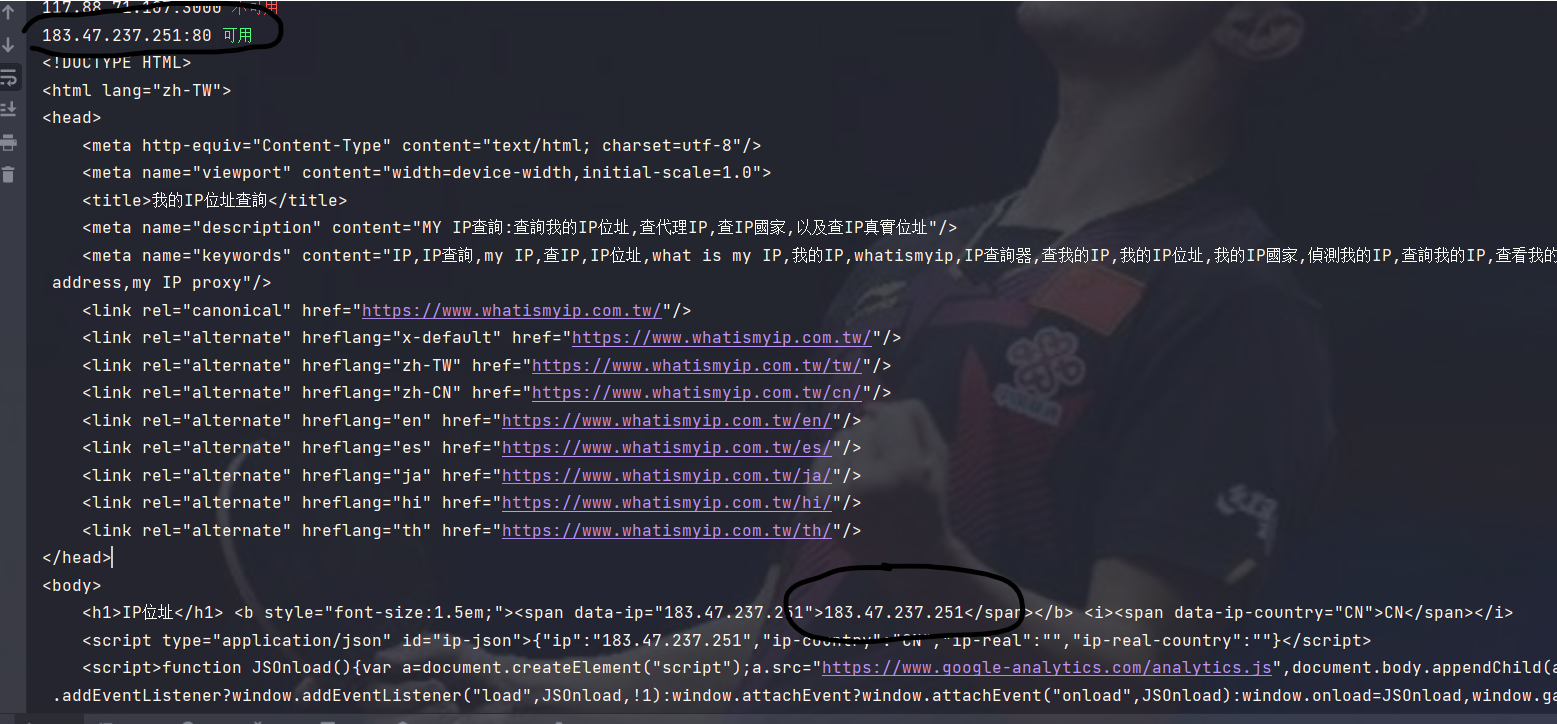

Finally, check whether these IPs actually work. This site is handy for the test: http://www.whatismyip.com.tw/

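It returns the IP address the request came from. To make the results easier to read, I used the terminal escape sequence `\033` for colored output and also printed the returned page.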
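`\033` is the ESC character; `\033[32m` switches the text color to green, `\033[31m` to red, and `\033[0m` resets it. A minimal sketch wrapping that in a helper (`colorize` and the color constants are hypothetical names), assuming a terminal that understands ANSI codes, as most modern ones do; older Windows consoles may print the raw codes instead:

```python
GREEN = '\033[32m'
RED = '\033[31m'
RESET = '\033[0m'


def colorize(text, color):
    """Wrap text in an ANSI color code followed by a reset."""
    return color + text + RESET


print('203.0.113.10:8080', colorize('usable', GREEN))
print('198.51.100.7:3128', colorize('unusable', RED))
```

Always ending with the reset code keeps the color from leaking into whatever is printed next.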

```python
import requests


def tes(agent):
    """Test each proxy against an IP-echo page and append working ones to ip.txt."""
    url = 'http://www.whatismyip.com.tw/'
    headers = {
        'User-Agent': 'Fiddler/5.0.20204.45441 (.NET 4.8; WinNT 10.0.19042.0; zh-CN; 8xAMD64; Auto Update; Full Instance; Extensions: APITesting, AutoSaveExt, EventLog, FiddlerOrchestraAddon, HostsFile, RulesTab2, SAZClipboardFactory, SimpleFilter, Timeline)'
    }
    for i in agent:
        # The test URL is http, so the proxy must be registered under the 'http' key
        proxies = {
            'http': 'http://' + i
        }
        try:
            # Free proxies are often dead; a timeout stops one from stalling the loop
            response = requests.get(url, headers=headers, proxies=proxies, timeout=5)
            if response.status_code == 200:
                print(i, '\033[32musable\033[0m')  # green
                print(response.text)
                with open('ip.txt', 'a', encoding='utf-8') as f:
                    f.write(i + '\n')
        except requests.RequestException:
            print(i, '\033[31munusable\033[0m')  # red
```

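The `http`/`https` key in the proxy settings must match the scheme of the URL you are requesting. Otherwise the proxy is not used at all and the request goes out from your own IP.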
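In `requests`, the keys of the `proxies` dict are matched against the scheme of the URL being requested, while the value is the address of the proxy itself. A minimal sketch that derives the key from the target URL so the two cannot drift apart; `proxies_for` is a hypothetical helper, and it assumes the proxy is a plain HTTP proxy (hence the `http://` prefix on the value):

```python
from urllib.parse import urlsplit


def proxies_for(url, proxy):
    """Build a requests-style proxies dict keyed by the target URL's scheme."""
    scheme = urlsplit(url).scheme  # 'http' or 'https'
    # Key: which requests go through the proxy. Value: how to reach the proxy itself.
    return {scheme: 'http://' + proxy}


print(proxies_for('http://www.whatismyip.com.tw/', '203.0.113.10:8080'))
print(proxies_for('https://www.kuaidaili.com/free/inha/1/', '203.0.113.10:8080'))
```

With only an `'http'` key, a request to an `https://` URL finds no matching entry and is sent directly, which is exactly the silent fallback to your own IP described above.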

After the program runs we get the usable IPs, and the IP reported by the page matches the proxy we used.
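A status code of 200 only shows that something answered; the match between the reported IP and the proxy is what confirms the proxy was really used. That comparison can be automated with a sketch like the one below. It assumes the echo page prints the visitor's address as a plain dotted-quad IPv4 string somewhere in its HTML; the actual format of whatismyip.com.tw has not been checked here, and `proxy_ip_seen` is a hypothetical name.

```python
import re

# Any dotted-quad IPv4-looking token; \b keeps 203.0.113.1 from matching inside 203.0.113.10
IPV4 = re.compile(r'\b(?:\d{1,3}\.){3}\d{1,3}\b')


def proxy_ip_seen(proxy, page_text):
    """Return True if the proxy's IP appears among the IPv4 addresses in the page."""
    proxy_ip = proxy.split(':')[0]
    return proxy_ip in IPV4.findall(page_text)


print(proxy_ip_seen('203.0.113.10:8080', '<h1>Your IP: 203.0.113.10</h1>'))  # True
print(proxy_ip_seen('203.0.113.1:8080', '<h1>Your IP: 203.0.113.10</h1>'))   # False
```

Inside `tes()`, this could be combined with the status check, for example `response.status_code == 200 and proxy_ip_seen(i, response.text)`, so that only proxies the page actually saw are written to `ip.txt`.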

The complete code:

```python
import re

import requests

HEADERS = {
    'User-Agent': 'Fiddler/5.0.20204.45441 (.NET 4.8; WinNT 10.0.19042.0; zh-CN; 8xAMD64; Auto Update; Full Instance; Extensions: APITesting, AutoSaveExt, EventLog, FiddlerOrchestraAddon, HostsFile, RulesTab2, SAZClipboardFactory, SimpleFilter, Timeline)'
}


def get_page(url, headers):
    """Fetch a page and return its HTML text, or None on a non-200 response."""
    response = requests.get(url, headers=headers, timeout=10)
    response.encoding = 'utf-8'
    if response.status_code == 200:
        return response.text
    return None


def analysis(html):
    """Extract 'ip:port' strings from one page of the proxy list."""
    pattern_ip = re.compile('<td data-title="IP">(.*?)</td>')
    pattern_port = re.compile('<td data-title="PORT">(.*?)</td>')
    ip_list = pattern_ip.findall(html)
    port_list = pattern_port.findall(html)
    # zip pairs IPs and ports row by row, including the last row
    agent = [ip + ':' + port for ip, port in zip(ip_list, port_list)]
    print(agent)
    return agent


def tes(agent):
    """Test each proxy against an IP-echo page and append working ones to ip.txt."""
    url = 'http://www.whatismyip.com.tw/'
    for i in agent:
        # The test URL is http, so the proxy goes under the 'http' key
        proxies = {'http': 'http://' + i}
        try:
            # Free proxies are often dead; the timeout stops one from stalling the loop
            response = requests.get(url, headers=HEADERS, proxies=proxies, timeout=5)
            if response.status_code == 200:
                print(i, '\033[32musable\033[0m')  # green
                print(response.text)
                with open('ip.txt', 'a', encoding='utf-8') as f:
                    f.write(i + '\n')
        except requests.RequestException:
            print(i, '\033[31munusable\033[0m')  # red


def main():
    # Pages 1-4 of the free proxy list
    for i in range(1, 5):
        url = f'https://www.kuaidaili.com/free/inha/{i}/'
        html = get_page(url, HEADERS)
        if html is None:
            # Skip pages that failed to load instead of crashing in analysis()
            continue
        agent = analysis(html)
        tes(agent)


if __name__ == '__main__':
    main()
```
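The script stops once `ip.txt` is written. One way the pool might then be used is to load the saved entries and pick a random proxy per request; the sketch below does that with hypothetical helper names (`parse_pool`, `load_pool`, `random_proxies`). Because `tes()` opens `ip.txt` in append mode, repeated runs can store the same proxy several times, so the loader drops duplicates while keeping the original order.

```python
import random


def parse_pool(lines):
    """Strip whitespace, drop blank lines and duplicates, keep first-seen order."""
    cleaned = (line.strip() for line in lines)
    return list(dict.fromkeys(line for line in cleaned if line))


def load_pool(path='ip.txt'):
    """Read the proxies that the testing step appended to ip.txt."""
    with open(path, encoding='utf-8') as f:
        return parse_pool(f)


def random_proxies(pool):
    """Pick one proxy at random, formatted for requests' proxies= argument (http targets)."""
    return {'http': 'http://' + random.choice(pool)}
```

A typical call would be `requests.get(url, proxies=random_proxies(load_pool()), timeout=5)` for an `http://` URL. Free proxies die quickly, so entries in the pool are best re-tested before each batch of scraping rather than trusted indefinitely.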